Wouldn’t it be incredible to control a robot with your mind? To have some machine read your thoughts and do whatever you want it to do without even lifting a finger? For the non-disabled, such a dream is about making life easier—adding another layer of convenience to daily tasks like sending texts or emails. For those who are disabled, such a dream is about regaining, or developing for the first time, what many of us define as basic human functions—walking, holding a cup of coffee, seeing a friend’s smile, hearing a loved one’s laugh.

Once merely an idea of sci-fi entertainment, the ability to control robots with one’s brain is becoming a tangible reality. Last month, Neuralink, a neurotech company founded in 2016 by Elon Musk, released a video showing a monkey playing Pong via mind control. Pager, the macaque, had Link, a coin-sized wireless device with flexible wire electrodes implanted in the arm- and hand-controlling areas of his motor cortex. After being implanted, Pager practiced playing Pong with a joystick while recordings were made of his motor cortex neurons. A machine learning-based decoder then read out Pager’s neural activity, correlating it with specific joystick movements. Next, the joystick was unplugged, and Pager’s decoded neural activity was used to play Pong. While Neuralink is definitely pushing the envelope on what neurotechnology may soon become available to the public, their work is a culmination of previous and ongoing research in neuroscience and robotics.

In the past two decades, the advancement of Brain Machine Interfaces (BMIs, also known as Brain Computer Interfaces) has accelerated. (BMIs encompass many functional roles, including hearing aids, visual neural prostheses, and even deep brain stimulation for psychiatric and neurological disorders.) These devices rely on key principles of systems neuroscience. First, consider that neurons “talk” to one another using electrical signals: when a neuron receives enough stimulation, ion channels in the neuron’s cell membrane open up, allowing the neuron to fire an action potential, or “spike”. This spike is an all-or-nothing signal that travels from one neuron to another in a neural circuit. In simpler animals, like the fruit fly Drosophila melanogaster, a single neuron’s activity, or amount of spiking, can result in great behavioral changes. However, studies have demonstrated that movement predictions in animals with more neurons, like monkeys and humans, are most accurate when based on the activity of tens of motor cortex neurons (Georgopoulos et al., 1986; Moran & Schwartz, 1999; Nicolelis & Lebedev, 2009). In short, generating a simple movement, like reaching for your cup of coffee and grasping it, actually requires the combined activities of populations of neurons, cascading from your motor cortex, through your spinal cord, and out to the muscles in your hand and arm.
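To make the idea of population-based movement prediction concrete, here is a minimal sketch of a population vector readout, in the spirit of the approach introduced by Georgopoulos et al. (1986). It is an illustration under stated assumptions, not any lab's actual decoder: each simulated neuron is assumed to fire fastest for one "preferred" movement direction and to fall off with the cosine of the angle away from it, and the baseline rate, tuning depth, and noise level are made-up values.

```python
import math
import random

def cosine_tuned_rate(preferred_deg, movement_deg, baseline=20.0, depth=15.0):
    """Firing rate (spikes/s) of a hypothetical cosine-tuned neuron."""
    delta = math.radians(movement_deg - preferred_deg)
    return baseline + depth * math.cos(delta)

def population_vector(preferred_degs, rates, baseline=20.0):
    """Decode direction by summing preferred-direction unit vectors,
    each weighted by how far that neuron's rate is above baseline."""
    x = sum((r - baseline) * math.cos(math.radians(p))
            for p, r in zip(preferred_degs, rates))
    y = sum((r - baseline) * math.sin(math.radians(p))
            for p, r in zip(preferred_degs, rates))
    return math.degrees(math.atan2(y, x)) % 360.0

def decode_error(n_neurons, true_deg=60.0, noise_sd=5.0, seed=0):
    """Absolute decoding error (degrees) for one noisy simulated trial."""
    rng = random.Random(seed)
    preferred = [rng.uniform(0.0, 360.0) for _ in range(n_neurons)]
    rates = [cosine_tuned_rate(p, true_deg) + rng.gauss(0.0, noise_sd)
             for p in preferred]
    estimate = population_vector(preferred, rates)
    # Wrap the difference into [-180, 180) before taking its magnitude
    return abs((estimate - true_deg + 180.0) % 360.0 - 180.0)
```

Averaging `decode_error(1, seed=s)` and `decode_error(50, seed=s)` over many seeds shows the trend described above: in this toy model a single neuron gives only a coarse guess, while pooling tens of neurons brings the estimate much closer to the true direction.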

Even at their simplest, BMIs must accomplish a few major and impressive tasks: (1) read out spikes from multiple neurons, (2) decode what the combined activity means, and (3) transform the decoded activity into controlled movements. BMIs have been able to perform the first two tasks of recording and decoding neural activity on a fundamental level since the mid-1980s (Georgopoulos et al., 1986). Many initial BMIs, however, did not control robotic limbs, but rather cursors moving in 2D on a computer screen. It wasn’t until the year 2000 that BMIs and robotics merged in non-human primates for the first time: Wessberg and colleagues used monkey motor cortex activity to control a robotic arm’s 3D movements (Wessberg et al., 2000). Such robotic control from brain activity was then implemented in human subjects as early as 2006 (Hochberg et al., 2006). But even without the concern of controlling a robot, the basic BMI tasks of reading and decoding neural activity data are complex and still being perfected to accommodate the limitations of biology, data science, and electrical engineering.

A few major biological issues have made BMI development difficult. For one, most devices used for recording directly from neurons, like Neuropixel, are stiff and relatively bulky, while the brain is elastic and full of important vasculature. This mismatch creates challenges: as the brain moves and deforms due to body movement, or as scar tissue forms around the insertion site, BMI recording probes might lose track of the neurons they started off recording (Moran, 2010). This is one new advantage of the Link: its recording device offers comparable recording density to Neuropixel, but is composed of flexible wires that are implanted via a robot that can detect brain vasculature and avoid it (though no solution for scar tissue formation has been proposed by Neuralink) (Musk & Neuralink, 2019). Can any BMI recording technologies overcome these challenges completely? Many researchers are working on developing effective BMIs that do not insert into the brain at all, but rather read out neural activity in other ways. These less invasive approaches broadly measure electrical activity at the brain’s surface (electrocorticography) or measure the ratio of oxygenated to deoxygenated blood as a proxy for brain activity (functional near infrared spectroscopy) (Moran, 2010; Hong et al., 2020). While less invasive techniques could be more approachable for patients seeking to use BMIs, they currently lag in decoding accuracy, primarily due to the lower spatial and temporal resolution of their recordings (Moran, 2010; Hong et al., 2020). Essentially, these techniques take more of an “average” of activity, rather than looking at the finer details.

In addition to these biomechanical issues, neural activity must be reliably decoded by BMIs to uncover the patient’s intended movements. Such decoding in neuroscience experiments usually involves wired transfer of neural activity to a computer, followed by offline signal processing that can be very slow. This processing involves removing electrical noise picked up from the patient’s environment and detecting spikes from individual neurons in the brain. These isolated spike data must then be correlated to specific movement trajectories or intended movements via a patient and computer training process. Two key goals in ongoing BMI development have been wireless data transfer, to ease daily BMI use, and rapid spike detection and denoising, to support fast robotic control with neural activity. Just before the press release demonstrating Pager’s wireless MindPong performance, work from BrainGate, an academic collaboration focused on neural prostheses, demonstrated successful implantation of a wireless BMI device in a human patient and its use to control a computer cursor, clearing a huge hurdle for the use of BMIs outside of a lab setting (Simeral et al., 2021). As for the actual decoding of neural activity into movement, data scientists have developed multiple neural network and machine learning-based approaches to map spikes to intended movements reliably over time (e.g. Sussillo et al., 2016; Degenhart et al., 2020). It is unclear what computational techniques Neuralink’s “custom algorithms” use (Musk & Neuralink, 2019).
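As a rough illustration of the spike detection step described above, the sketch below uses simple threshold crossing, a common textbook approach. It is not the method used by BrainGate or Neuralink, whose algorithms are not described here. The noise level is estimated from the median absolute value of the trace (divided by 0.6745, which converts it to a standard deviation for Gaussian noise), and the threshold multiplier and refractory window are assumed values chosen only for the example.

```python
import statistics

def detect_spikes(trace, threshold_sd=4.5, refractory_samples=30):
    """Return the sample indices where a voltage trace crosses a
    negative threshold, counting at most one spike per refractory window."""
    # Robust noise estimate: large, rare spikes barely shift the median
    noise_sd = statistics.median(abs(v) for v in trace) / 0.6745
    threshold = -threshold_sd * noise_sd

    spike_indices = []
    last_spike = -refractory_samples  # allows a spike at the very first sample
    for i, value in enumerate(trace):
        if value < threshold and i - last_spike >= refractory_samples:
            spike_indices.append(i)
            last_spike = i
    return spike_indices
```

Calling `detect_spikes` on a list of voltage samples returns the positions of putative spikes. A real-time system would have to do this on streaming data from many channels at once, which is part of why fast, lightweight detection matters for wireless devices.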

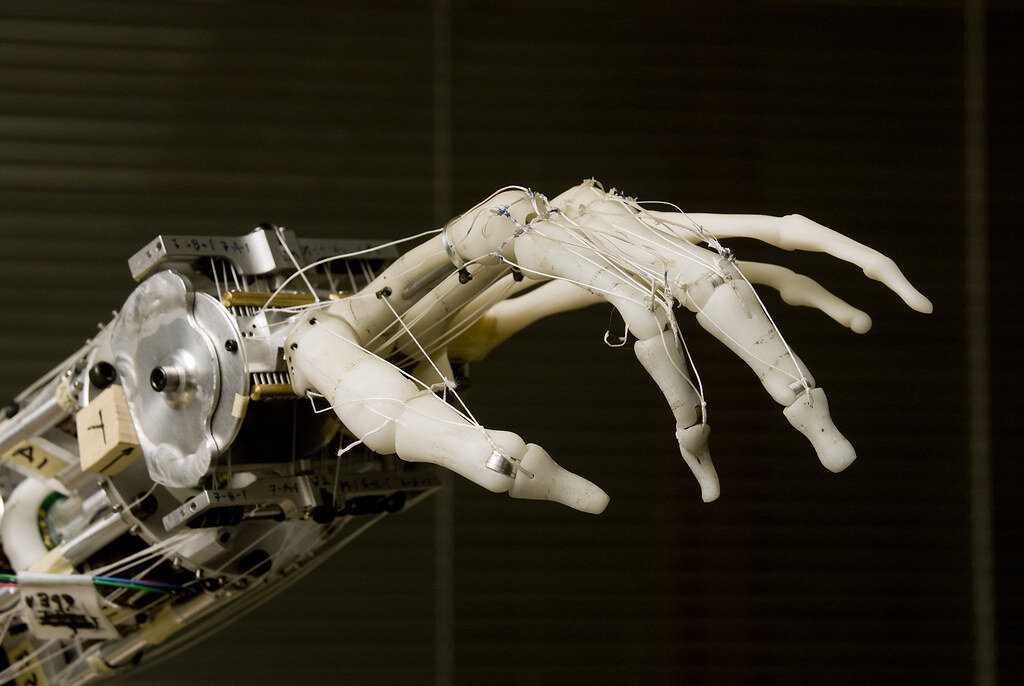

A robotic hand designed by the Neurobotics Lab at University of Washington.

Beyond these considerations for generating reliable movement from brain activity, researchers are also working to develop prosthetics that provide realistic sensory feedback to the prosthetic users. Imagine the difficulty of discriminating between your phone, keys, and chapstick when reaching into your bag. Such a task requires tactile information about those objects’ shapes, sizes, and textures. Recent work from The Biorobotics Institute in Italy has demonstrated the successful implementation of robotic arms that can indeed sense tactile features: discriminating among object shapes and stiffness, as well as different textures of paper, rubber, PVC, velcro and wood (Raspopovic et al., 2014; Mazzoni et al., 2020). Other studies have also developed means of sensory feedback for motor control, making desired movements substantially easier to perform (Mazzoni et al., 2020; Lienkämper et al., 2021). By generating neural prosthetics with both sensory and motor connections, neurotechnology can truly bridge the gap between the disabled and non-disabled.

So how does the excitement of a monkey playing Pong with its mind tie into all these advances? Isn’t that old news in the field of BMIs? And what’s next in the field of BMIs? The academic field of BMI research is still working on achieving stable, long-term motor rehabilitation with increasing movement complexity in people with paralysis through efforts like BrainGate. Neuralink is simply adding power, speed, and publicity toward improving and easing access to BMI technology. Neuralink plans to start human trials later this year, making it the first robotic neurosurgery-based implantation of a neural recording device with such a high data recording and processing capacity in humans (Musk & Neuralink, 2019). Elon Musk has touted the advancement of BMIs as a step towards rehabilitation for the disabled and for greater convenience for the non-disabled, promoting the idea of a future where users can perform tasks on their iPhones without even lifting a finger. Following this step, Musk and Neuralink hope to use Link for movement restoration in patients with paralysis. While ethical concerns of equal access to such neurotechnology could be cause for alarm, one thing is for certain: companies like Neuralink can help innovate BMI technology and expedite the process of making such technology a widely available resource for those in need.

Edited by Shira Redlich

References:

Degenhart, A.D., et al. “Stabilization of a brain–computer interface via the alignment of low-dimensional spaces of neural activity.” Nat Biomed Eng, vol. 4, 2020. doi: 10.1038/s41551-020-0542-9.

Georgopoulos, A., et al. “Neuronal Population Coding of Movement Direction.” Science, vol. 233, no. 4771, 1986. doi: 10.1126/science.3749885.

Hochberg, L., et al. “Neuronal ensemble control of prosthetic devices by a human with tetraplegia.” Nature, vol. 442, 2006. doi: 10.1038/nature04970.

Hong, KS., et al. “Brain–machine interfaces using functional near-infrared spectroscopy: a review.” Artif Life Robotics, vol. 25, 2020. doi: 10.1007/s10015-020-00592-9.

Lienkämper, R., et al. “Quantifying the alignment error and the effect of incomplete somatosensory feedback on motor performance in a virtual brain-computer-interface setup.” Sci Rep, vol. 11, 2021. doi: 10.1038/s41598-021-84288-5.

Mazzoni, A., et al. “Morphological Neural Computation Restores Discrimination of Naturalistic Textures in Trans-radial Amputees.” Sci Rep, vol. 10, 2020. doi: 10.1038/s41598-020-57454-4.

Moran, D. “Evolution of brain-computer interface: action potentials, local field potentials and electrocorticograms.” Curr Opin Neurobiol, vol. 20, 2010. doi: 10.1016/j.conb.2010.09.010.

Moran, D., & Schwartz, A. “Motor cortical representation of speed and direction during reaching.” J Neurophysiol, vol. 82, no. 5, 1999. doi: 10.1152/jn.1999.82.5.2676.

Musk, E., & Neuralink. “An integrated brain-machine interface platform with thousands of channels.” bioRxiv, 2019. doi: 10.1101/703801.

Nicolelis, M.A., & Lebedev, M.A. “Principles of neural ensemble physiology underlying the operation of brain-machine interfaces.” Nat Rev Neurosci, vol. 10, 2009. doi: 10.1038/nrn2653.

Raspopovic, S., et al. “Restoring Natural Sensory Feedback in Real-Time Bidirectional Hand Prostheses.” Sci Transl Med, vol. 6, 2014. doi: 10.1126/scitranslmed.3006820.

Simeral, J.D., et al. “Home Use of a Percutaneous Wireless Intracortical Brain-Computer Interface by Individuals With Tetraplegia.” IEEE Trans Biomed Eng, 2021. doi: 10.1109/TBME.2021.3069119.

Sussillo, D., et al. “Making brain–machine interfaces robust to future neural variability.” Nat Commun, vol. 7, 2016. doi: 10.1038/ncomms13749.

Wessberg, J., et al. “Real-time prediction of hand trajectory by ensembles of cortical neurons in primates.” Nature, vol. 408, 2000. doi: 10.1038/35042582.